Data over Space and Time (36-467/667)

Fall 2018

If you are looking for another iteration of this class, perhaps because you are taking it, see here.

Cosma Shalizi

Tuesdays and Thursdays, 10:30--11:50, Posner Mellon Auditorium

This course is an introduction to the opportunities and challenges of analyzing data from processes unfolding over space and time. It will cover basic descriptive statistics for spatial and temporal patterns; linear methods for interpolating, extrapolating, and smoothing spatio-temporal data; basic nonlinear modeling; and statistical inference with dependent observations. Class work will combine practical exercises in R, some mathematics of the underlying theory, and case studies analyzing real data from various fields (economics, history, meteorology, ecology, etc.). Depending on available time and class interest, additional topics may include: statistics of Markov and hidden-Markov (state-space) models; statistics of point processes; simulation and simulation-based inference; agent-based modeling; dynamical systems theory.

Co-requisite: 36-401 for undergraduates taking the course as 36-467; consent of the professor for graduate students taking the course as 36-667.

Note: Graduate students must register for the course as 36-667; if the system does let you sign up for 36-467, you will be dropped from the roster. Undergraduates (whether statistics majors or not) must register for 36-467.

This webpage will serve as the class syllabus. Course materials (notes, homework assignments, etc.) will be posted here, as available.

Goals and Learning Outcomes

(Accreditation officials look here)

The goal of this class is to train you in using statistical models to analyze interdependent data spread out over space, time, or both, using the models as data summaries, as predictive instruments, and as tools for scientific inference. We will build on the theory of statistical inference for independent data taught in 36-226, and complement the theory and applications of the linear model, introduced in 36-401. After taking the class, when you're faced with a new temporal, spatial, or spatio-temporal data-analysis problem, you should be able to (1) select appropriate methods, (2) use statistical software to implement them, (3) critically evaluate the resulting statistical models, and (4) communicate the results of your analyses to collaborators and to non-statisticians.

Topics

- Exploratory data analysis for temporal and spatial data: Graphics; smoothing; trends and detrending; auto- and cross-covariances; nonlinear association measures; Fourier analysis

- Optimal linear prediction and its uses: Theory of optimal linear prediction; prediction for interpolation, extrapolation, and noise removal; "Wiener filter"; "kriging"; estimating optimal linear predictors

- Inference with dependent data: Statistical estimation with dependent data; ergodic properties; the bootstrap; simulation-based inference

- Generative models: Linear autoregressive models for time series and spatial processes; Markov chains and Markov processes; compartment (especially epidemic) models; state-space or hidden-Markov models; nonparametric, nonlinear autoregressions; Markov random fields; cellular automata and interacting particle systems

- Possible advanced topics: Point processes; nonlinear dynamical systems theory and chaos; agent-based modeling; causal inference across time series; stochastic differential equations; optimal nonlinear prediction.

This class will not give much coverage to ARIMA models of time series, a subject treated extensively in 36-618.
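As a rough illustration of the "optimal linear prediction" topic: the best linear predictor of a quantity Y from observations X uses weights beta = Var[X]^(-1) Cov[X, Y], so everything hinges on knowing the covariances. The sketch below (Python with NumPy, for illustration only; all course work is done in R) interpolates a missing value of a mean-zero stationary series from its two neighbours, assuming a known exponential autocovariance gamma(h) = sigma^2 rho^|h|. The covariance model, the parameter values, the observed values, and the function names are all assumptions, not course materials.

```python
import numpy as np

def autocov(h, sigma2=1.0, rho=0.8):
    """Assumed exponential autocovariance: gamma(h) = sigma2 * rho**|h|."""
    return sigma2 * rho ** np.abs(h)

def optimal_linear_weights(obs_times, target_time, cov=autocov):
    """Weights beta = Var[X]^{-1} Cov[X, Y] for a mean-zero stationary series."""
    obs_times = np.asarray(obs_times, dtype=float)
    # Var[X]: covariances among the observation times
    var_x = cov(obs_times[:, None] - obs_times[None, :])
    # Cov[X, Y]: covariance of each observation with the target time
    cov_xy = cov(obs_times - target_time)
    beta = np.linalg.solve(var_x, cov_xy)
    # Mean squared error of the optimal predictor: Var[Y] - Cov[Y, X] beta
    mse = cov(0.0) - cov_xy @ beta
    return beta, mse

# Interpolate time 1 from observations at times 0 and 2
beta, mse = optimal_linear_weights([0, 2], 1)
x = np.array([1.5, 0.5])   # hypothetical observed values at times 0 and 2
print(beta, mse, beta @ x)
```

With rho = 0.8, each neighbour gets weight rho / (1 + rho^2), about 0.488, which is the standard interpolation weight for an AR(1) process. In practice the covariance function is unknown and would itself have to be estimated from data.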

Course Mechanics

Textbooks

The only required textbook is Gidon Eshel, Spatiotemporal Data Analysis (Princeton, New Jersey: Princeton University Press, 2011, ISBN 978-0-691-12891-7, available on JSTOR).

The CMU library has electronic access to the full text, in PDF, through the JSTOR service. (You will need to be either on campus or logged in to the university library.) Links to individual chapters will be posted as appropriate.

In addition, we will assign some sections from Peter Guttorp, Stochastic Modeling of Scientific Data (Boca Raton, Florida: Chapman & Hall / CRC Press, 1995, ISBN 978-0-412-99281-0).

Because this book is expensive, the library doesn't have electronic access, and a lot of it is about (interesting and important) topics outside the scope of the class, it is not required. Instead, scans of the appropriate sections will be distributed via Canvas.

You will also be doing a lot of computational work in R, so Paul Teetor, The R Cookbook (O'Reilly Media, 2011, ISBN 978-0-596-80915-7) is recommended. R's help files answer "What does command X do?" questions. This book is organized to answer "What commands do I use to do Y?" questions.

Assignments

There are three reasons you will get assignments in this course. In order of decreasing importance:

- Practice. Practice is essential to developing the skills you are learning in this class. It also actually helps you learn, because some things which seem murky clarify when you actually do them, and sometimes trying to do something shows what you only thought you understood.

- Feedback. By seeing what you can and cannot do, and what comes easily and what you struggle with, I can help you learn better, by giving advice and, if need be, adjusting the course.

- Evaluation. The university is, in the end, going to stake its reputation (and that of its faculty) on assuring the world that you have mastered the skills and learned the material that goes with your degree. Before doing that, it requires an assessment of how well you have, in fact, mastered the material and skills being taught in this course.

To serve these goals, there will be three kinds of assignment in this course.

- In-class exercises

- Most lectures will have in-class exercises. These will be short (10--20 minutes) assignments, emphasizing problem solving, done in class in small groups. The assignments will be given out in class, and must be handed in on paper by the end of class. On some days, a randomly-selected group may be asked to present their solution to the class.

- Homework

- Most weeks will have a homework assignment, divided into a series of questions or problems. These will have a common theme, and will usually build on each other, but different problems may involve statistical theory, analyzing real data sets on the computer, and communicating the results. The in-class exercises will either be problems from that week's homework, or close enough that seeing how to do the exercise should tell you how to do some of the problems.

- All homework will be submitted electronically through Canvas. Most weeks, homework will be due at 6:00 pm on Wednesday. There will be a few weeks, clearly noted on the syllabus and on the assignments, when Thursday lecture will be canceled and homework will be due at noon on Thursday, i.e., the end of the lecture period. (When this means that there are only six days for the next homework, it will be shortened accordingly.)

- There are specific formatting requirements for homework (see below).

- Exams

- There will be both a midterm and a final exam. Each of these will require you to analyze a real-world data set, answering questions posed about it in the exam, and to write up your analysis in the form of a scientific report. The exam assignments will provide the data set, the specific questions, and a rubric for your report.

- Both exams will be take-home, and you will have at least one week to work on each, without homework (from this class anyway). Both exams will be cumulative.

- Exams are to be submitted through Canvas, and must follow the same formatting requirements as the homework (see below).

The midterm will be due on October 11, and the final on December 14. If you might have a conflict with these dates, contact me as soon as possible.

Grading

Grades will be broken down as follows:

- Exercises: 15%. All exercises will have equal weight.

- Homework: 40%. There will be 12 homeworks, all of equal weight. Your lowest two homework grades will be dropped, no questions asked. If you turn in all homework assignments (on time), your lowest three homework grades will be dropped. Late homework will not be accepted for any reason.

- Midterm: 20%

- Final: 25%

Grade boundaries will be as follows:

| Grade | Course score |
|---|---|
| A | [90, 100] |
| B | [80, 90) |
| C | [70, 80) |
| D | [60, 70) |
| R | < 60 |

To be fair to everyone, these boundaries will be held to strictly.
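To make the grading arithmetic concrete, here is a minimal sketch of how the weights, the homework drops, and the letter-grade boundaries above combine. It is not the official gradebook: the function names are hypothetical, and it assumes every score is on a 0-100 scale, a missing homework counts as 0, and no rounding is applied before the boundaries. It is written in Python purely for illustration.

```python
def course_grade(exercises, homeworks, midterm, final, all_on_time):
    """Weighted course score on a 0-100 scale (hypothetical helper).

    exercises, homeworks: lists of scores in [0, 100]; assumes a missing
    homework is recorded as 0. all_on_time: True if every homework was
    turned in on time, which earns a third dropped grade.
    """
    n_drop = 3 if all_on_time else 2
    kept = sorted(homeworks)[n_drop:]        # drop the lowest n_drop homework grades
    hw = sum(kept) / len(kept)               # remaining homeworks weighted equally
    ex = sum(exercises) / len(exercises)     # all exercises weighted equally
    return 0.15 * ex + 0.40 * hw + 0.20 * midterm + 0.25 * final

def letter(score):
    """Apply the fixed boundaries: A is [90, 100], B is [80, 90), and so on."""
    for cutoff, grade in [(90, "A"), (80, "B"), (70, "C"), (60, "D")]:
        if score >= cutoff:
            return grade
    return "R"

# Hypothetical student: nine perfect homeworks, one 50, two never submitted
score = course_grade(exercises=[100, 100, 80, 80],
                     homeworks=[100] * 9 + [50, 0, 0],
                     midterm=85, final=88, all_on_time=False)
print(round(score, 2), letter(score))
```

Here the two missing homeworks are the two grades dropped, so the homework average is 95 and the weighted score is 90.5. Because the boundaries are held to strictly, a score of 89.99 would still be a B.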

If you think that a particular assignment was wrongly graded, tell me as soon as possible. Direct any questions or complaints about your grades to me; the teaching assistants have no authority to make changes. (This also goes for your final letter grade.) Complaints that the thresholds for letter grades are unfair, that you deserve a higher grade, etc., will accomplish much less than pointing to concrete problems in the grading of specific assignments.

As a final word of advice about grading, "what is the least amount of work I need to do in order to get the grade I want?" is a much worse way to approach higher education than "how can I learn the most from this class and from my teachers?".

Lectures

Lectures will be used to amplify the readings, provide examples and demos, answer questions, and generally discuss the material. They are also when you will do the in-class exercises, which will help with your homework and are part of your grade.

You are expected to do the readings before coming to class.

Do not use any electronic devices during lecture: no laptops, no tablets, no phones, no watches that do more than tell time. If you need to use an electronic assistive device, please make arrangements with me beforehand. (Experiments show, pretty clearly, that students learn more in electronics-free classrooms, not least because your device isn't distracting your neighbors.)

Office Hours

If you want help with computing, please bring a laptop.

| Day | Time | Who | Where |
|---|---|---|---|
| Monday | 2:30--3:30 | Mr. Elliott | Porter Hall A20A (Baker Hall 229A on 10 September) |
| Tuesday | 3:00--4:00 | Mr. Elliott | Baker Hall 132Q |
| Wednesday | 1:00--3:00 | Prof. Shalizi | Baker Hall 229C |

If you cannot make the regular office hours, or have concerns you'd rather discuss privately, please e-mail me about making an appointment.

R, R Markdown, and Reproducibility

R is a free, open-source software package/programming language for statistical computing. You should have begun to learn it in 36-401 (if not before). No other form of computational work will be accepted. If you are not able to use R, or do not have ready, reliable access to a computer on which you can do so, let me know at once.

Communicating your results to others is as important as getting good results in the first place. Every homework assignment will require you to write about that week's data analysis and what you learned from it; this writing is part of the assignment and will be graded. Raw computer output and R code are not acceptable; your document must be humanly readable.

All homework and exam assignments are to be written up in R Markdown. (If you know what knitr is and would rather use it, ask first.) R Markdown is a system that lets you embed R code, and its output, into a single document. This helps ensure that your work is reproducible, meaning that other people can re-do your analysis and get the same results. It also helps ensure that what you report in your text and figures really is the result of your code. For help on using R Markdown, see "Using R Markdown for Class Reports".

For each assignment, you should submit two, and only two, files: an R Markdown source file, integrating text, generated figures and R code, and the "knitted", humanly-readable document, in either PDF (preferred) or HTML format. (I cannot read Word files, and you will lose points if you submit them.) I will be re-running the R Markdown file of randomly selected students; you should expect to be picked for this about once in the semester. You will lose points if your R Markdown file does not, in fact, generate your knitted file (making obvious allowances for random numbers, etc.).

Some problems in the homework will require you to do math. R Markdown provides a simple but powerful system for type-setting math. (It's based on the LaTeX document-preparation system widely used in the sciences.) If you can't get it to work, you can hand-write the math and include scans or photos of your writing in the appropriate places in your R Markdown document. You will, however, lose points for doing so, on a sliding scale: no penalty for homework 1, growing to a 90% penalty (for those problems) by homework 12.

Canvas and Piazza

Homework and exams will be submitted electronically through Canvas, which will also be used as the gradebook. Some readings and course materials will also be distributed through Canvas.

We will be using the Piazza website for question-answering. You will receive an invitation within the first week of class.

Anonymous-to-other-students posting of questions and replies will be allowed, at least initially. Anonymity will go away for everyone if it is abused.

Collaboration, Cheating and Plagiarism

Except for explicit group exercises, everything you turn in for a grade must be your own work, or a clearly acknowledged borrowing from an approved source; this includes all mathematical derivations, computer code and output, figures, and text. Any use of permitted sources must be clearly acknowledged in your work, with citations letting the reader verify your source. You are free to consult the textbook and recommended class texts, lecture slides and demos, any resources provided through the class website, solutions provided to this semester's previous assignments in this course, books and papers in the library, or legitimate online resources, though again, all use of these sources must be acknowledged in your work. (Websites which compile course materials are not legitimate online resources.)

In general, you are free to discuss homework with other students in the class, though not to share work; such conversations must be acknowledged in your assignments. You may not discuss the content of assignments with anyone other than current students or the instructors until after the assignments are due. (Exceptions can be made, with prior permission, for approved tutors.) You are, naturally, free to complain, in general terms, about any aspect of the course, to whomever you like.

During the take-home exams, you are not allowed to discuss the content of the exams with anyone other than the instructors; in particular, you may not discuss the content of the exam with other students in the course.

Any use of solutions provided for any assignment in this course in previous years is strictly prohibited, both for homework and for exams. This prohibition applies even to students who are re-taking the course. Do not copy the old solutions (in whole or in part), do not "consult" them, do not read them, do not ask your friend who took the course last year if they "happen to remember" or "can give you a hint". Doing any of these things, or anything like these things, is cheating, it is easily detected cheating, and those who thought they could get away with it in the past have failed the course.

If you are unsure about what is or is not appropriate, please ask me before submitting anything; there will be no penalty for asking. If you do violate these policies but then think better of it, it is your responsibility to tell me as soon as possible to discuss how your misdeeds might be rectified. Otherwise, violations of any sort will lead to severe, formal disciplinary action, under the terms of the university's policy on academic integrity.

On the first day of class, every student will receive a written copy of the university's policy on academic integrity, a written copy of these course policies, and a "homework 0" on the content of these policies. This assignment will not factor into your grade, but you must complete it before you can get any credit for any other assignment.

Accommodations for Students with Disabilities

The Office of Equal Opportunity Services provides support services for both physically disabled and learning disabled students. For individualized academic adjustment based on a documented disability, contact Equal Opportunity Services at eos [at] andrew.cmu.edu or (412) 268-2012; they will coordinate with me.

Schedule

SUBJECT TO CHANGE (with notice)

(The readings will be made specific at least one week before they're assigned.)

- August 28 (Tuesday): Lecture 1, Introduction to the course

- Welcome; course mechanics; data distributed over space and time; goals and challenges; basic EDA by way of pictures

- Slides for lecture 1 (R Markdown source file)

- Homework 0: assignment; see above for this course's policy on cheating, collaboration and plagiarism; here for CMU's policy on academic integrity; and on Piazza for the excerpt from Turabian's Manual for Writers

- Homework 1: assignment, kyoto.csv data file

- August 30 (Thursday): Lecture 2, Smoothing, Trends, Detrending I

- Smoothing by local averaging. The idea of a trend, and de-trending. Smoothing as EDA. Some of the math of smoothing: the hat matrix, degrees of freedom. Expanding in eigenvectors.

- Notes for lectures 2 and 3 (Rmd source file)

- Reading: Eshel, chapter 7; Guttorp, introduction and chapter 1; Turabian, excerpts (on Piazza)

- Optional reading: Karen Kafadar, "Smoothing Geographical Data, Particularly Rates of Disease", Statistics in Medicine 15 (1996): 2539--2560

- Homework 0 due

- September 4 (Tuesday): Lecture 3, Smoothing, Trends, Detrending II

- The hat matrix as the source of all knowledge. Residuals after de-trending as estimates of the fluctuations. The Yule-Slutsky effect. Picking how much to smooth by cross-validation. Special considerations for ratios (Kafadar).

- Slides for lecture 3 (Rmd source file)

- Reading: Eshel, chapter 8

- September 6 (Thursday): Lecture 4, Principal Components I

- The goal of principal components: finding simpler, linear structure in complicated, high-dimensional data. Math of principal components: linear approximation -> preserving variance -> eigenproblem. Reminders from linear algebra about eigenproblems. Mathematical solution to PCA. How to do PCA in R.

- Slides (Rmd source)

- Reading: Eshel, chapter 4, and skim chapter 5

- Homework 1 due at 6 pm on Wednesday, September 5

- Homework 2: assignment; smoother.matrix.R for use in problem 1

- September 11 (Tuesday): Lecture 5, Principal Components II

- Brief recap on PCA. Applying PCA to multiple time series. Applying PCA to spatial data. Applying PCA to spatio-temporal data. Interpreting PCA results. Why PCA can be good exploratory analysis, but is not statistical inference. Glimpses of some alternatives to PCA: independent component analysis, slow feature analysis, etc.

- Slides (Rmd source)

- Reading: Eshel, chapter 11, sections 11.1--11.7 and 11.9--11.10 (i.e., skipping 11.8 and 11.11--11.12)

- September 13 (Thursday): No class

- Homework 2 due at noon on Thursday, September 13

- Homework 3: assignment; soccomp.irep1.csv data file; soccomp.csv data file.

- September 18 (Tuesday): Lecture 6, Optimal Linear Prediction

- Mathematics of prediction. Mathematics of optimal linear prediction, in any context whatsoever. Ordinary least squares as an estimator of the optimal linear predictor. Why we need the covariance functions.

- Slides (Rmd)

- Reading: Eshel, chapter 9, sections 9.1--9.3

- September 20 (Thursday): Lecture 7, Linear Interpolation and Extrapolation of Time Series

- Applying the linear-predictor idea to time series: interpolating between observations; extrapolating into the future (or past). The concept of stationarity. Auto- and cross-covariance. Covariance functions as EDA. Basic covariance estimation in R.

- Slides (Rmd)

- Reading: Eshel, chapter 9, section 9.5 (skipping 9.5.3 and 9.5.4)

- Homework 3 due at 6:00 pm on Wednesday, September 19

- Homework 4: assignment

- September 25 (Tuesday): Lecture 8, Linear Interpolation and Extrapolation of Spatial and Spatio-Temporal Data

- Applying the linear-predictor idea to spatial or spatio-temporal data ("kriging"): interpolating between observations, extrapolating into the unobserved. More advanced covariance estimation in spatial contexts. Concepts of stationarity, isotropy, separability, etc.

- Slides (Rmd)

- September 27 (Thursday): Lecture 9, Separating Signal and Noise with Linear Methods

- Applying the linear-predictor idea to remove observational noise ("the Wiener filter"). Extracting periodic components and seasonal adjustment.

- Slides (Rmd)

- Homework 4 due at 6:00 pm on Wednesday, September 26

- Homework 5: assignment

- October 2 (Tuesday): Lecture 10, Fourier Methods I

- Decomposing time series into periodic signals, a.k.a. "going from the time domain to the frequency domain", a.k.a. "spectral analysis". The Fourier transform and the inverse Fourier transform. Fourier transform of a time series. Fourier transform of an autocovariance function, a.k.a. "the power spectrum". Wiener-Khinchin theorem. Interpreting the power spectrum; hunting for periodic components. Estimating the power spectrum: the periodogram.

- Slides (Rmd)

- Reading: Eshel, sections 4.3.2 and 9.5.4

- October 4 (Thursday): Lecture 11, Fourier Methods II; Midterm review

- Recap on Fourier analysis. More on estimating the power spectrum: smoothed periodograms. Frequency-domain covariance estimation. Spatial and spatio-temporal Fourier transforms. More interpretation. Fourier analysis vs. PCA. A brief glimpse of wavelets. Generating new time series from the spectrum.

- Homework 5 due at 6:00 pm on Wednesday, October 3

- Midterm exam: Assignment, ccw.csv data-set

- October 9 (Tuesday): Guest lecture by Prof. Patrick Manning: "African Population and Migration: Statistical Estimates, 1650--1900"

- Reading: Handout distributed in class on 4 October [PDF]

- October 11 (Thursday): No class

- Midterm exam due at noon

- Homework 6: assignment

- October 16 (Tuesday): Lecture 12, Linear Generative Models for Time Series

- Linear generative models for random sequences: autoregressions. Deterministic dynamical systems; more fun with eigenvalues and eigenvectors. Stochastic aspects. Vector auto-regressions.

- Slides (Rmd)

- Handout: AR(p) vs. VAR(1) models

- Reading: Eshel, sections 9.5 and 9.7

- October 18 (Thursday): Lecture 13, Linear Generative Models for Spatial and Spatio-Temporal Data

- Simultaneous vs. conditional autoregressions for random fields. The "Gibbs sampler" trick. Autoregressions for spatio-temporal processes.

- Slides (Rmd)

- Homework 6 due at 6:00 pm on Wednesday, October 17

- Homework 7: assignment, sial.csv data file

- October 23 (Tuesday): Lecture 14, Statistical Inference with Dependent Data I

- Reminder: why maximum likelihood and Gaussian approximations work for IID data. Consistency from convergence (law of large numbers); Gaussian approximation from fluctuations (central limit theorem). The "sandwich covariance" for general estimators. How these ideas carry over to dependent data.

- Slides (.Rmd, pareto.R)

- Reading: Guttorp, Appendix A

- October 25 (Thursday): Lecture 15, Inference with Dependent Data II

- Ergodic theory, a.k.a. laws of large numbers for dependent data. Basic ergodic theory for stochastic processes. Correlation times and effective sample size. Inference with autoregressions. Gestures at more advanced ergodic theory.

- Reading: N/A

- Slides (.Rmd)

- Homework 7 due at 6:00 pm on Wednesday, October 24

- Homework 8: Assignment, data (=simulation) files demorun.csv and remorun.csv

- October 30 (Tuesday): Lecture 16, canceled

- No class meeting today.

- November 1 (Thursday): Lecture 17, Simulation

- General idea of simulating a statistical model. The "Monte Carlo method": using simulation to compute probabilities, expected values, etc.

- Slides (.Rmd)

- Homework 8 due at 6:00 pm on Wednesday, October 31

- Homework 9: canceled

- November 6 (Tuesday): Lecture 18, Simulation for Inference I: The Bootstrap

- The bootstrap principle: approximating the sampling distribution by simulating a good estimate of the data-generating distribution. Uncertainty via model-based bootstraps. Uncertainty via resampling bootstraps for time series and for spatial processes. Related ideas: "surrogate data" tests of null hypotheses; ensemble forecasts.

- Slides (.Rmd)

- Reading: Shalizi, "The Bootstrap", American Scientist 98:3 (May-June 2010), 186--190

- November 8 (Thursday): Lecture 19, Simulation for Inference II: Matching Simulations to Data

- Reminder about estimation in general. The method of moments. The method of simulated moments. Indirect inference. Some asymptotics.

- Slides (.Rmd)

- Homework 9 (originally due at 6:00 pm on Wednesday, November 7): canceled

- Homework 10: assignment

- November 13 (Tuesday): Lecture 20, Markov Chains I

- Markov chains and the Markov property. Examples. Basic properties of Markov chains; special kinds of chain. Yet more fun with eigenvalues and eigenvectors. How one trajectory evolves vs. how a population evolves. Ergodicity and central limit theorems. Markov chain Monte Carlo. Higher-order Markov chains and related models.

- Slides (.Rmd)

- Reading: Guttorp, chapter 2, sections 2.1--2.6 (inclusive)

- Optional reading: Handouts on "Monte Carlo and Markov Chains" (especially Section 2), and "Markov Chain Monte Carlo" from stat. computing 2013

- November 15 (Thursday): Lecture 21, Compartment Models

- General idea of compartment models as a special kind of Markov model. Applications in demography, epidemiology, sociology, chemistry, etc.

- Notes; .Rnw source file for the notes

- Homework 10 due at 6:00 pm on Wednesday, November 14

- Homework 11: assignment; ckm_nodes.csv data file; ckm_network data file (only needed for the extra credit)

- November 20 (Tuesday): Information Theory and Optimal Prediction

- Because this is Thanksgiving week, this is an optional special-topics lecture.

- Information theory: entropy, mutual information, entropy rate, information rate. Measuring prediction quality with entropy. Mathematical construction of the prediction process. Optimality and Markov properties. Sketch of how to make this work on data.

- Slides

- Homework 11 due at 6:00 pm on Tuesday, November 20

- No new assignment this week; enjoy Thanksgiving!

- November 27 (Tuesday): Lecture 22, Markov Chains II

- Likelihood inference for individual trajectories. Least-squares inference for population data. Conditional density estimates for continuous spaces. Model-checking.

- Reading: Guttorp, chapter 2, sections 2.7--2.9 (inclusive)

- Slides (.Rmd)

- Optional reading: Maximum Likelihood Estimation for Markov Chains handout from the (no longer taught) 36-462, 2009 (its notation differs slightly from ours)

- Homework 12: assignment, dicty-seq-1.dat and dicty-seq-2.dat data files

- November 29 (Thursday): Lecture 23, Markov Random Fields

- Markov models in space. Applications: ecology; image analysis. Spatio-temporal Markov models: general idea; cellular automata; interacting particle systems. Inference.

- Slides (.Rmd)

- Reading: Guttorp, chapter 4, omitting section 4.6, and skimming section 4.3

- December 4 (Tuesday): Lecture 24, State-Space or Hidden-Markov Models

- Markov dynamics + distorting or noisy observations = Non-Markov observations. Model formulation. Inference: E-M algorithm, Kalman filter, particle filter, simulation-based methods. Spatio-temporal version: dynamic factor models.

- Notes (.Rmd)

- Reading: Guttorp, section 2.12

- December 6 (Thursday): Lecture 25, Nonlinear Models

- Using smoothing to estimate regression functions. Nonlinear autoregressions. Examples. R implementations.

- Homework 12 due at 6:00 pm on Wednesday, December 5

- Final exam: Assignment, sial.csv

- December 14 (Friday): Final exam due at 10:30 am
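Lectures 2 and 3 describe a linear smoother through its hat matrix: the smoothed values are a fixed matrix w times the data, the residuals after de-trending are (I - w) times the data, and the trace of w acts as the smoother's effective degrees of freedom. Below is a minimal sketch in Python with NumPy (for illustration only; course work is done in R), assuming a centered moving average of half-width k that averages whatever neighbours exist near the ends of the series. That boundary rule is one of several possible conventions, and the function name and simulated data are hypothetical.

```python
import numpy as np

def moving_average_hat(n, k):
    """Hat (smoother) matrix for a centered moving average of half-width k.

    Row i averages x[i-k], ..., x[i+k], truncated at the ends of the series,
    so every row sums to 1.
    """
    w = np.zeros((n, n))
    for i in range(n):
        lo, hi = max(0, i - k), min(n, i + k + 1)
        w[i, lo:hi] = 1.0 / (hi - lo)
    return w

rng = np.random.default_rng(42)
t = np.arange(50)
x = np.sin(t / 8) + rng.normal(scale=0.3, size=t.size)   # simulated trend + noise

w = moving_average_hat(t.size, k=3)
trend = w @ x          # smoothed values: the estimated trend
fluct = x - trend      # residuals after de-trending, i.e. (I - w) x
df = np.trace(w)       # effective degrees of freedom of the smoother
print(round(df, 3))
```

Widening the window (larger k) shrinks the diagonal entries of w, so the trace falls: a smoother fit spends fewer effective degrees of freedom.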

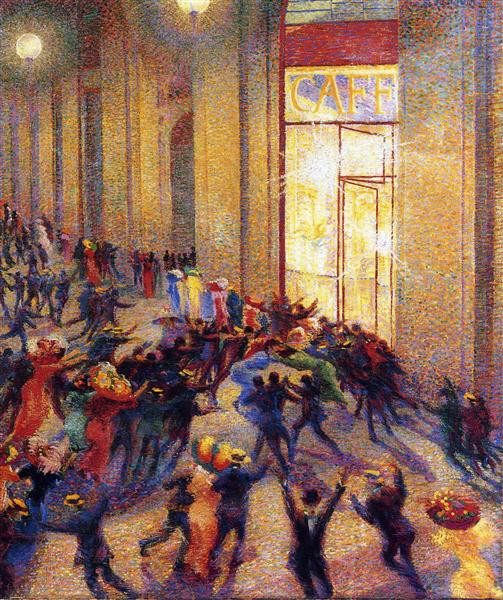

Image credit: Pictures on this page are from my teacher David Griffeath's Particle Soup Kitchen website, except for Umberto Boccioni's Riot in the Galleria.