- Homework 1 due

- Homework 2: assignment, helper code for problem 1

Lecture 5 (Tuesday, 15 September): Principal Components I

- The goal of principal components: finding simpler, linear structure in complicated, high-dimensional data. Math of principal components: linear approximation -> preserving variance -> eigenproblem. Reminders from linear algebra about eigenproblems. Mathematical solution to PCA. How to do PCA in R.

- Reading: Eshel, chapter 4, and skim chapter 5

- Slides (.Rmd)
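A minimal sketch of the "preserving variance -> eigenproblem" step from Lecture 5: the first principal component is the leading eigenvector of the sample covariance matrix, found here by power iteration. The course itself does PCA in R; this Python version, its toy data, and the function names are all illustrative assumptions, not the course's code.

```python
import random

def covariance_matrix(rows):
    """Sample covariance matrix of a list of equal-length observation vectors."""
    n, p = len(rows), len(rows[0])
    means = [sum(r[j] for r in rows) / n for j in range(p)]
    centered = [[r[j] - means[j] for j in range(p)] for r in rows]
    return [[sum(c[i] * c[j] for c in centered) / (n - 1) for j in range(p)]
            for i in range(p)]

def mat_vec(matrix, v):
    """Matrix-vector product for nested lists."""
    return [sum(m_ij * v_j for m_ij, v_j in zip(row, v)) for row in matrix]

def leading_eigenpair(matrix, iterations=500):
    """Power iteration: (largest eigenvalue, unit-length eigenvector)."""
    v = [1.0] * len(matrix)
    for _ in range(iterations):
        w = mat_vec(matrix, v)
        norm = sum(x * x for x in w) ** 0.5
        v = [x / norm for x in w]
    eigenvalue = sum(a * b for a, b in zip(v, mat_vec(matrix, v)))
    return eigenvalue, v

random.seed(1)
# Toy data (assumed): points scattered along the direction (1, 1), plus noise.
data = []
for _ in range(200):
    t = random.gauss(0, 3)
    data.append([t + random.gauss(0, 0.3), t + random.gauss(0, 0.3)])

cov = covariance_matrix(data)
variance_pc1, pc1 = leading_eigenpair(cov)
# Share of total variance (the trace of the covariance) captured by PC1.
share = variance_pc1 / (cov[0][0] + cov[1][1])
print("first principal component:", pc1)
print("share of variance explained:", share)
```

The eigenvalue is the variance of the data projected onto the component, which is why dividing it by the trace gives the share of variance preserved.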

Lecture 6 (Thursday, 17 September): Principal Components II

- Brief recap on PCA. Applying PCA to spatial data. Applying PCA to multiple time series. Applying PCA to spatio-temporal data. Interpreting PCA results. Why PCA can be good exploratory analysis, but is not statistical inference.

- Reading: Eshel, chapter 11, sections 11.1--11.7 and 11.9--11.10 (i.e., skipping 11.8 and 11.11--11.12)

- Slides (.Rmd)

- Homework:

- Homework 2 due

- Homework 3: Assignment (with links to the data files)

Lecture 7 (Tuesday, 22 September): Optimal Linear Prediction

- Mathematics of prediction. Mathematics of optimal linear prediction, in any context whatsoever. Ordinary least squares as an estimator of the optimal linear predictor. Why we need the covariance functions.

- Reading: Eshel, chapter 9, sections 9.1--9.3

- Slides (.Rmd)
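To illustrate why Lecture 7's optimal linear predictor needs covariances: with one predictor, the best linear predictor of Y from X has slope Cov(X, Y) / Var(X) and intercept E[Y] - slope * E[X], and ordinary least squares just plugs in sample moments. A small sketch, with made-up data and a hypothetical function name:

```python
def optimal_linear_predictor(xs, ys):
    """Plug-in estimate of the optimal linear predictor of y from x.

    Returns (intercept, slope), where slope = Cov(x, y) / Var(x)
    and intercept = mean(y) - slope * mean(x).
    """
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    var_x = sum((x - mean_x) ** 2 for x in xs) / n
    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys)) / n
    slope = cov_xy / var_x
    intercept = mean_y - slope * mean_x
    return intercept, slope

# Toy data (assumed) lying exactly on the line y = 1 + 2x.
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]
intercept, slope = optimal_linear_predictor(xs, ys)
print(intercept, slope)
```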

Lecture 8 (Thursday, 24 September): Linear Interpolation and Extrapolation of Time Series

- Applying the linear-predictor idea to time series: interpolating between observations; extrapolating into the future (or past). The concept of stationarity. Auto- and cross-covariance. Covariance functions as EDA. Basic covariance estimation in R. Removing trends; stationary fluctuations after detrending. Historical notes: Wiener and Kolmogorov.

- Reading: Eshel, chapter 9, section 9.5 (skipping 9.5.3 and 9.5.4)

- Slides (.Rmd, with comments on the sample code provided)

- Homework:

- Homework 3 due

- Homework 4: assignment
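A sketch of the basic covariance estimation mentioned under Lecture 8: the sample autocovariance at lag h averages products of centered values h steps apart, assuming the series is stationary. The course does this in R; the Python function below and its toy series are illustrative assumptions.

```python
def sample_autocovariance(series, max_lag):
    """Sample autocovariance at lags 0..max_lag, dividing by n at every lag."""
    n = len(series)
    mean = sum(series) / n
    return [
        sum((series[t] - mean) * (series[t + h] - mean) for t in range(n - h)) / n
        for h in range(max_lag + 1)
    ]

# Toy series (assumed): strict alternation, so odd lags are negatively
# correlated and even lags positively correlated.
series = [1.0, -1.0] * 50
acov = sample_autocovariance(series, max_lag=4)
# Autocorrelation is autocovariance rescaled by the lag-0 value (the variance).
acf = [c / acov[0] for c in acov]
print(acf)
```

Dividing by n rather than n - h at every lag is a common convention that slightly shrinks high-lag estimates toward zero.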

Lecture 9 (Tuesday, 29 September): Optimal Linear Prediction for Spatial and Spatio-Temporal Data

- Applying the linear-predictor idea to data spread over space or over space and time ("kriging"). The importance of estimating covariance between spatial locations. Assumptions restricting the form of the covariance and so enabling estimation: stationarity, isotropy, separability. Estimating parametric covariance functions. Examples.

- Reading: No required reading

- Slides (.Rmd)

Lecture 10 (Thursday, 1 October): Separating Signal and Noise with Linear Methods

- Observational noise: using the linear-predictor idea to remove observational noise, a.k.a. "the Wiener filter". The mysteriously-named "nugget effect" (accounting for measurement noise that's not auto-correlated). Periodicity: noticing periodicity from time series; from autocorrelation functions. Extracting periodic components with a known period by averaging. "Climate" and "anomaly". Seasonal adjustment of time series.

- Reading:

- No required reading

- Optional reading:

- (****) Norbert Wiener, Extrapolation, Interpolation and Smoothing of Stationary Time-Series: with Engineering Applications (Cambridge, Massachusetts: The Technology Press, 1949 [but originally published as a classified technical report, National Defense Research Committee, 1942])

- Slides (.Rmd)

- Homework:

- Homework 4 due

- Homework 5: assignment

Lecture 11 (Tuesday, 6 October): Linear Generative Models for Time Series

- Linear generative models for random sequences: autoregressions. Deterministic dynamical systems; more fun with eigenvalues and eigenvectors. Stochastic aspects. Vector auto-regressions.

- Reading:

- Eshel, sections 9.5 and 9.7

- Optional reading:

- (**) Judy L. Klein, Statistical Visions in Time: A History of Time Series Analysis, 1662--1938, especially Part II [This studies how linear regression, a method developed to adjust for differences across a population at a single time, came to be used to predict changes over time in a single quantity, which sounds weird when you put it that way]

- Slides (.Rmd)

- Handout: AR(p) vs. higher-dimensional VAR(1)
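The idea behind the AR(p) vs. VAR(1) handout can be sketched for p = 2: stacking (X_t, X_{t-1}) into a state vector turns a second-order scalar autoregression into a first-order vector one. The code below is an assumed illustration (not the handout's own code) that runs both forms on the same noise and checks that they agree.

```python
import random

def simulate_ar2(phi1, phi2, noise, x0, x1):
    """Scalar AR(2): X_t = phi1 * X_{t-1} + phi2 * X_{t-2} + eps_t."""
    xs = [x0, x1]
    for eps in noise:
        xs.append(phi1 * xs[-1] + phi2 * xs[-2] + eps)
    return xs

def simulate_var1_companion(phi1, phi2, noise, x0, x1):
    """Same process as a VAR(1) on the state (X_t, X_{t-1})."""
    # Companion matrix: first row carries the AR coefficients,
    # second row copies X_t down into the X_{t-1} slot.
    a = [[phi1, phi2],
         [1.0, 0.0]]
    state = [x1, x0]
    xs = [x0, x1]
    for eps in noise:
        state = [a[0][0] * state[0] + a[0][1] * state[1] + eps,
                 a[1][0] * state[0] + a[1][1] * state[1]]
        xs.append(state[0])
    return xs

rng = random.Random(7)
noise = [rng.gauss(0, 1) for _ in range(100)]
path_ar2 = simulate_ar2(0.5, -0.3, noise, x0=0.0, x1=0.0)
path_var1 = simulate_var1_companion(0.5, -0.3, noise, x0=0.0, x1=0.0)
max_gap = max(abs(a - b) for a, b in zip(path_ar2, path_var1))
print("largest difference between the two simulations:", max_gap)
```

The eigenvalues of the companion matrix then govern stability, which is where the "more fun with eigenvalues and eigenvectors" comes in.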

Lecture 12 (Thursday, 8 October): Linear Generative Models for Spatial and Spatio-Temporal Data

- Simultaneous vs. conditional autoregressions for random fields. The "Gibbs sampler" trick. Autoregressions for spatio-temporal processes.

- Reading: None

- Slides (.Rmd)

- Homework:

- Homework 5 due

- Homework 6: assignment

Lecture 13 (Tuesday, 13 October): Statistical Inference with Dependent Data I: Really Understanding Inference with Independent Data

- Reminder: why maximum likelihood and Gaussian approximations work for IID data. Consistency from convergence (law of large numbers); Gaussian approximation from fluctuations (central limit theorem). The "sandwich covariance" for general estimators. Looking ahead at how these ideas carry over to dependent data.

- Reading: Guttorp, Appendix A

- Slides (.Rmd)

Lecture 14 (Thursday, 15 October): Inference with Dependent Data II

- Ergodic theory, a.k.a. laws of large numbers for dependent data. Basic ergodic theory for stochastic processes. Correlation times and effective sample size. Inference with autoregressions. Gestures at more advanced ergodic theory. Likelihood-based inference for dependent data.

- Slides (.Rmd)

- Homework:

- Homework 6 due

- Homework 7: Assignment. Note: Because I messed up posting this on time, this is now due at the same time as HW 8, and will be extra credit, replacing your lowest grade on the other homeworks.
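One way to make Lecture 14's "effective sample size" concrete: for a stationary series with autocorrelations rho(h), the variance of the sample mean is roughly (sigma^2 / n) * (1 + 2 * sum of rho(h) over h >= 1) for large n, so n correlated observations are worth about n / (1 + 2 * sum rho(h)) independent ones. The sketch below assumes an AR(1) with rho(h) = phi^h, where the sum has the closed form (1 - phi) / (1 + phi); the function name is hypothetical.

```python
def effective_sample_size(n, autocorrelations):
    """Large-n approximation: n / (1 + 2 * sum of autocorrelations at lags >= 1)."""
    return n / (1 + 2 * sum(autocorrelations))

phi = 0.5   # assumed AR(1) coefficient
n = 1000    # assumed number of observations

# AR(1) autocorrelations decay geometrically; truncate the sum at lag 199.
rho = [phi ** h for h in range(1, 200)]
n_eff = effective_sample_size(n, rho)

# Closed form for AR(1): n * (1 - phi) / (1 + phi).
closed_form = n * (1 - phi) / (1 + phi)
print(n_eff, closed_form)
```

With phi = 0.5, a thousand observations carry about as much information about the mean as a third as many independent ones.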

Lecture 15 (Tuesday, 20 October): Simulation

- General idea of simulating a statistical model. The "Monte Carlo method": using simulation to compute probabilities, expected values, etc.

- Slides (.Rmd)
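A bare-bones illustration of the Monte Carlo method from Lecture 15: simulate the model many times and report the fraction of runs in which the event happens. The example event (the largest of three uniform draws exceeding 0.9) is an assumption chosen because its exact probability, 1 - 0.9^3, is easy to check.

```python
import random

def monte_carlo_probability(event, simulate, n_draws, rng):
    """Estimate P(event) as the fraction of simulated draws where it occurs."""
    hits = sum(1 for _ in range(n_draws) if event(simulate(rng)))
    return hits / n_draws

def simulate_three_uniforms(rng):
    """One draw from the toy model: three independent Uniform(0, 1) values."""
    return [rng.random() for _ in range(3)]

def max_exceeds_threshold(draw):
    """The event of interest: the largest value is above 0.9."""
    return max(draw) > 0.9

rng = random.Random(42)
estimate = monte_carlo_probability(
    max_exceeds_threshold, simulate_three_uniforms, 100_000, rng
)
exact = 1 - 0.9 ** 3
print("Monte Carlo estimate:", estimate, "exact:", exact)
```

The error shrinks like one over the square root of the number of draws, so the same recipe works for events whose probability has no closed form.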

Lecture 16 (Thursday, 22 October): Simulation for Inference I: The Bootstrap

- The bootstrap principle: approximating the sampling distribution by simulating from a good estimate of the data-generating distribution. Uncertainty via model-based bootstraps. Uncertainty via resampling bootstraps for time series and for spatial processes. Related ideas: "surrogate data" tests of null hypotheses; ensemble forecasts.

- Reading:

- CRS, "The Bootstrap", American Scientist 98:3 (May-June 2010), 186--190

- Optional readings:

- (*) Bradley Efron, "Bootstrap Methods: Another Look at the Jackknife", Annals of Statistics 7 (1979): 1--26

- (**) Peter Bühlmann, "Bootstraps for Time Series", Statistical Science 17 (2002): 52--72

- (***) S. N. Lahiri, Resampling Methods for Dependent Data (Berlin: Springer-Verlag, 2003)

- (***) Elizaveta Levina and Peter J. Bickel, "Texture synthesis and nonparametric resampling of random fields", Annals of Statistics 34 (2006): 1751--1773

- Slides (.Rmd)

- Homework:

- Homework 7 due (postponed: now due together with Homework 8, per the note under Lecture 14)

- Homework 8: assignment, lv.R
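A sketch of one common resampling scheme for time series, the moving-block bootstrap: resample contiguous blocks rather than single observations, so short-range dependence survives inside each block. The schedule does not say which scheme Lecture 16 uses, so the scheme, the block length, and the toy AR(1) series below are all assumptions.

```python
import random

def moving_block_resample(series, block_length, rng):
    """Build a pseudo-series of the original length from random contiguous blocks."""
    n = len(series)
    resampled = []
    while len(resampled) < n:
        start = rng.randrange(n - block_length + 1)
        resampled.extend(series[start:start + block_length])
    return resampled[:n]

def bootstrap_standard_error(series, statistic, block_length, n_replicates, rng):
    """Standard deviation of the statistic across bootstrap pseudo-series."""
    replicates = [
        statistic(moving_block_resample(series, block_length, rng))
        for _ in range(n_replicates)
    ]
    center = sum(replicates) / n_replicates
    return (sum((r - center) ** 2 for r in replicates) / (n_replicates - 1)) ** 0.5

def mean(xs):
    return sum(xs) / len(xs)

rng = random.Random(0)
# Toy data (assumed): an AR(1) series with coefficient 0.7.
series = [0.0]
for _ in range(299):
    series.append(0.7 * series[-1] + rng.gauss(0, 1))

se = bootstrap_standard_error(series, mean, block_length=20, n_replicates=500, rng=rng)
print("block-bootstrap standard error of the mean:", se)
```

Resampling individual points instead would destroy the autocorrelation and typically understate the uncertainty for positively correlated data.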

Lecture 17 (Tuesday, 27 October): Simulation for Inference II: Matching Simulations to Data

- Reminder about estimation in general. The method of moments. The method of simulated moments. "Indirect" inference: matching the parameters estimated from an "auxiliary" or "working" model. Some asymptotics.

- Optional reading:

- (*) Andrew Gelman and Cosma Rohilla Shalizi, "Philosophy and the Practice of Bayesian Statistics", British Journal of Mathematical and Statistical Psychology 66 (2013): 8--38, arxiv:1006.3868

- (**) Christian Gouriéroux, Alain Monfort and E. Renault, "Indirect Inference", Journal of Applied Econometrics 8 (1993): S85--S118 [JSTOR]

- (*) Brian D. Ripley, Spatial Statistics (New York: Wiley, 1981)

- Slides (.Rmd)

Lecture 18 (Thursday, 29 October): Markov Chains I

- Markov chains and the Markov property. Examples. Basic properties of Markov chains; special kinds of chain. Yet more fun with eigenvalues and eigenvectors. How one trajectory evolves vs. how a population evolves. Ergodicity and central limit theorems. Higher-order Markov chains and related models. Markov chain Monte Carlo.

- Reading:

- Guttorp, chapter 2, sections 2.1--2.6 (inclusive)

- Handouts on "Monte Carlo and Markov Chains" (especially Section 2), and "Markov Chain Monte Carlo" from stat. computing 2013

- Slides (.Rmd)

- Homework:

- Homework 7 due

- Homework 8 due

- Homework 9: Assignment, R file with new functions
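For Lecture 18's contrast between one trajectory and a population: a population's distribution over states is a row vector that gets multiplied by the transition matrix at each step, and for an ergodic chain it converges to the invariant distribution (the left eigenvector with eigenvalue 1). A small sketch with an assumed two-state chain:

```python
def step_distribution(dist, transition):
    """One step of population evolution: new_dist[j] = sum_i dist[i] * P[i][j]."""
    k = len(transition)
    return [sum(dist[i] * transition[i][j] for i in range(k)) for j in range(k)]

# Assumed two-state transition matrix; each row sums to 1.
transition = [[0.9, 0.1],
              [0.5, 0.5]]

# Start with the whole population in state 0 and iterate.
dist = [1.0, 0.0]
for _ in range(200):
    dist = step_distribution(dist, transition)

# Invariant distribution from the balance condition pi_0 * 0.1 = pi_1 * 0.5.
invariant = [5 / 6, 1 / 6]
print(dist, invariant)
```

The second eigenvalue of this matrix is 0.4, so the distance to the invariant distribution shrinks by that factor at every step.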

Election Day (Tuesday, 3 November): NO CLASS

Lecture 19 (Thursday, 5 November): Markov Chains II

- Likelihood inference for individual trajectories. Least-squares inference for population data. Conditional density estimates for continuous spaces. Model-checking.

- Reading:

- Guttorp, chapter 2, sections 2.7--2.9 (inclusive)

- Maximum Likelihood Estimation for Markov Chains handout from the (no longer taught) 36-462, 2009 (uses slightly different notation than we're using)

- Slides (.Rmd)

- Homework:

- Homework 9 due

- Homework 10: assignment, helper code, dicty-seq-1.dat, dicty-seq-2.dat (WARNING: the data files are large!)

Lecture 20 (Tuesday, 10 November): Epidemic Models

- The basic "susceptible-infectious-removed" (SIR) epidemic model. The probability model and its deterministic limit. The idea of the "basic reproductive number" R0 and how it relates to the rates of transmission and removal. Why diseases do not necessarily evolve to be less lethal to their hosts. The epidemic threshold when R0=1. Complications: gaps between being infected and becoming infectious; the possibility of being infectious without showing symptoms; re-infection. Epidemics in social networks, and how network structure affects the epidemic threshold; why high-degree people tend to be among the first infected, and disease-control strategies based on "destroying the hubs". Statistical issues in connecting epidemic models to data.

- Reading:

- Zeynep Tufekci, "Don’t Believe the COVID-19 Models: That’s not what they’re for", The Atlantic 2 April 2020

- Optional readings:

- (**) Mark E. J. Newman, "The spread of epidemic disease on networks", Physical Review E 66 (2002): 016128, arxiv:cond-mat/0205009

- (*) Tom Britton, "Epidemic models on social networks -- with inference", arxiv:1908.05517

- (**) Romualdo Pastor-Satorras and Alessandro Vespignani, "Immunization of complex networks", Physical Review E 65 (2002): 036104, arxiv:cond-mat/0107066

- (*) Lisa Sattenspiel (with contributions by Alun Lloyd), The Geographic Spread of Infectious Diseases: Models and Applications (Princeton, New Jersey: Princeton University Press, 2009) [Full text access via JSTOR]

- Slides (.Rmd)
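A sketch of the deterministic limit of the SIR model from Lecture 20, in the standard formulation where beta is the transmission rate, gamma the removal rate, and R0 = beta / gamma (an assumption; the slides may parameterize things differently). It uses crude Euler steps and shows the epidemic threshold: infections grow only when R0 > 1.

```python
def simulate_sir(beta, gamma, n_people, initial_infected, days, dt=0.1):
    """Euler integration of dS/dt = -beta*S*I/N, dI/dt = beta*S*I/N - gamma*I.

    Returns the path of the number of infectious individuals.
    """
    susceptible = n_people - initial_infected
    infected = initial_infected
    infected_path = [infected]
    for _ in range(int(round(days / dt))):
        new_infections = beta * susceptible * infected / n_people * dt
        new_removals = gamma * infected * dt
        susceptible -= new_infections
        infected += new_infections - new_removals
        infected_path.append(infected)
    return infected_path

# Assumed parameter values, chosen so that R0 = beta / gamma is 2 and 0.5.
above_threshold = simulate_sir(beta=0.4, gamma=0.2, n_people=1000.0,
                               initial_infected=1.0, days=200)
below_threshold = simulate_sir(beta=0.1, gamma=0.2, n_people=1000.0,
                               initial_infected=1.0, days=200)

print("peak infectious, R0 = 2.0:", max(above_threshold))
print("peak infectious, R0 = 0.5:", max(below_threshold))
```

Below the threshold the single initial case is already the peak; above it, the outbreak takes off until enough susceptibles are used up.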

Lecture 21 (Thursday, 12 November): Compartment Models

- General idea of compartment models as a special kind of Markov model. Applications in demography, epidemiology, sociology, chemistry, etc.

- Reading: handout (.Rnw)

- Slides (.Rmd)

- Homework:

- Homework 10 due

- Homework 11: assignment, ckm_nodes.csv data file, ckm_network.dat data file (only needed for extra credit)

Lecture 22 (Tuesday, 17 November): Markov Random Fields

- Markov models in space. The Gibbs-Markov equivalence. The Gibbs sampler again. Examples with the Ising model. Inference. Spatio-temporal Markov models: general idea; cellular automata.

- Reading: Guttorp, chapter 4, omitting section 4.6, and skimming section 4.3

- Slides (.Rmd)

Lecture 23 (Thursday, 19 November): State-Space or Hidden-Markov Models

- Markov dynamics + distorting or noisy observations = Non-Markov observations. Model formulation. Inference: E-M algorithm, Kalman filter, particle filter, simulation-based methods. Spatio-temporal version: dynamic factor models.

- Reading: Guttorp, section 2.12

- Slides (.Rmd); the more detailed handout (.Rmd)

- Homework:

- Homework 11 due

- Homework 12: assignment, mt.s1.csv data file

NO CLASS on Tuesday, 24 November

- There was going to be an optional lecture on point processes, but it's become clear that there really won't be enough attendance to justify this. I'll still post the slides/notes, but we won't be meeting.

- Reading:

- Guttorp, ch. 5

- Alex Reinhart, "A Review of Self-Exciting Spatio-Temporal Point Processes and Their Applications", Statistical Science 33 (2018): 299--318, arxiv:1708.02647

- Brad Luen and Philip B. Stark, "Testing Earthquake Predictions", pp. 302--315 in Deborah Nolan and Terry Speed (eds.), Probability and Statistics: Essays in Honor of David A. Freedman (Beachwood, Ohio, USA: Institute of Mathematical Statistics, 2008)

- Extra-optional more advanced reading:

- (***) Seth Flaxman, Yee Whye Teh, and Dino Sejdinovic, "Poisson intensity estimation with reproducing kernels", AISTATS 2017, arxiv:1610.08623

- (*) Charles Loeffler and Seth Flaxman, "Is Gun Violence Contagious? A Spatiotemporal Test", Journal of Quantitative Criminology 34 (2018): 999--1017, arxiv:1611.06713

- (*) Alex Reinhart and Joel Greenhouse, "Self-exciting point processes with spatial covariates: modeling the dynamics of crime", Journal of the Royal Statistical Society C 67 (2018): 1305--1329, arxiv:1708.03579

Thanksgiving Day (Thursday, 26 November): NO CLASS

Lecture 24 (Tuesday, 1 December): Nonlinear Prediction I: Model-Agnostic Predictions

- Using smoothing to estimate regression functions. Nonlinear autoregressions. Additive autoregressions. The "time-delay embedding" method and the question of "how many lags?" When can we expect model-agnostic methods to work?

- Readings (all advanced and optional):

- (*) Jianqing Fan and Qiwei Yao, Nonlinear Time Series: Nonparametric and Parametric Methods (Berlin: Springer-Verlag, 2003) [Full-text access via Springerlink]

- (*) Holger Kantz and Thomas Schreiber, Nonlinear Time Series Analysis (2nd edition, Cambridge, UK: Cambridge University Press, 2004)

- (**) Norman H. Packard, James P. Crutchfield, J. Doyne Farmer and Robert S. Shaw, "Geometry from a Time Series", Physical Review Letters 45 (1980): 712--716

- (***) Norbert Wiener, "Nonlinear Prediction and Dynamics", vol. III, pp. 247--252 in Jerzy Neyman (ed.), Proceedings of the Third Berkeley Symposium on Mathematical Statistics and Probability (Berkeley: University of California Press, 1956)

- Slides (.Rmd)

Lecture 25 (Thursday, 3 December): Nonlinear Prediction II: Model-Reliant Predictions

- Estimating the parameters of a model. Estimating the state of a model. Extrapolating the estimated state forward in time using the estimated parameters. Ensemble-based forecasts to handle uncertainty. Issues with extremes and "functional box-plots". The importance of model-checking.

- Slides (.Rmd); handout on propagation of error

- Reading: optional readings on the last page of the slides

- Homework:

- Homework 12 due

- Homework 13: assignment, ccw.csv data file

Lecture 26 (Tuesday, 8 December): Regressions with Dependent Observations

- Reminders about why regression theory usually assumes observations are IID. Situations where this breaks down: "panel" or "longitudinal" data and correlations within a "unit" over time; correlations between countries or regions in spatial cross-sections; correlations because of shared "ancestry". Effects on linear regression: OLS is still unbiased but inefficient, and all your inferential statistics are wrong. Solution: generalized least squares would be efficient if we knew the covariance structure; ways of figuring out the covariance without knowing it to start with. Some examples, and some case studies in what goes wrong when we ignore these issues.

- Reading:

- Recent, fairly easy-to-read papers that highlight important issues:

- Morgan Kelly, "The Standard Errors of Persistence", SSRN/3398303 (2019)

- Youjin Lee and Elizabeth L. Ogburn, "Testing for Network and Spatial Autocorrelation", pp. 91--104 in Naoki Masuda, Kwang-Il Goh, Tao Jia, Junichi Yamanoi and Hiroki Sayama (eds.), Proceedings of NetSci-X 2020: Sixth International Winter School and Conference on Network Science, arxiv:1710.03296

- Youjin Lee and Elizabeth L. Ogburn, "Network Dependence Can Lead to Spurious Associations and Invalid Inference", Journal of the American Statistical Association forthcoming (2020), arxiv:1908.00520

- Thomas B. Pepinsky, "On Whorfian Socioeconomics", SSRN/3321347 (2019)

- Classic, harder-to-read papers about methods:

- (**) Peter Diggle, Kung-Yee Liang and Scott L. Zeger, Analysis of Longitudinal Data (Oxford: Oxford University Press, 1994) [This is actually pretty easy to read, if you have the time for a full-length book; the CMU library has electronic access]

- (***) Kung-Yee Liang and Scott L. Zeger, "Longitudinal data analysis using generalized linear models", Biometrika 73 (1986): 13--22

- (***) Scott L. Zeger and Kung‐Yee Liang, "An overview of methods for the analysis of longitudinal data", Statistics in Medicine 11 (1992): 1825--1839

- Slides (.Rmd)

Lecture 27 (Thursday, 10 December): Causal Inference over Time

- What do statisticians mean by "causality"? What "Granger causality" is, and why it's usually not interesting. Graphical causal models. Defining causal effects in terms of "surgery" on the graph. Graphical causal models for variables evolving over time. Discovering the right graph, assuming additive dependence.

- Reading: (**) Tianjiao Chu and Clark Glymour, "Search for Additive Nonlinear Time Series Causal Models", Journal of Machine Learning Research 9 (2008): 967--991

- Slides (.Rmd)

- Homework:

- Homework 13 due

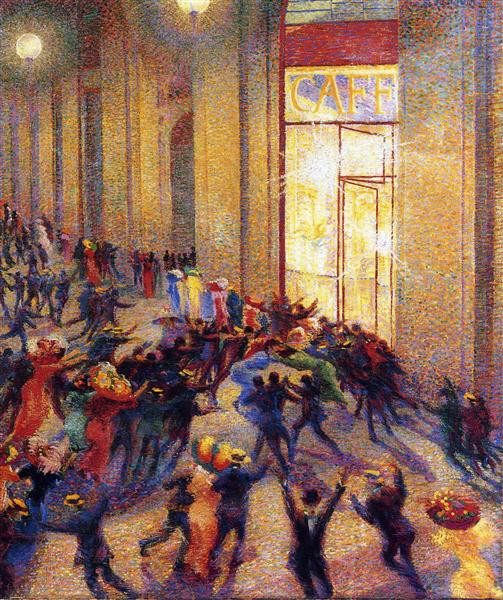

Image credit: Pictures on this page are from my teacher David Griffeath's Particle Soup Kitchen website, except for Umberto Boccioni's Riot in the Galleria.