Cosma Shalizi

Research

Interests: Nonparametric prediction of time series; learning theory and nonlinear dynamics; information theory; stochastic automata, state space and hidden Markov models; causation and prediction; large deviations and ergodic theory; neuroscience; statistical mechanics and self-organization; social and complex networks; heavy-tailed distributions; collective cognition and distributed problem-solving.

(For my complete papers, dissertation, CV, selected presentations, etc., please see my main research page. Potential dissertation students should look at my list of on-going and possible projects, but I will not be taking any new students until fall 2020 at the earliest. If you are not at CMU, by all means apply to our graduate program. I have no influence over admissions, and don't want any, so writing me about that is a waste of your time. I have no openings for post-docs or other employees. This includes self- or government-funded visitors.)

My work revolves around prediction and inference for dependent, and often high-dimensional, data, drawing on tools from machine learning, nonlinear dynamics and information theory.

My original training is in the statistical physics of complex systems: high-dimensional systems where the variables are strongly interdependent, but cannot be effectively resolved into a single low-dimensional subspace. I particularly worked with symbolic dynamics, and with cellular automata, which are spatial stochastic processes modeling pattern formation, fluid flow, magnetism and distributed computation, among other things. I remain interested in the role of information theory and statistical inference in the foundations of statistical mechanics, where I think some of the conventional views have things completely backwards.

Much of my earlier work involves complexity measures, like thermodynamic depth, and especially Grassberger-Crutchfield-Young "statistical complexity", the amount of information about the past of a system needed to optimally predict its future. This is related to the idea of a minimal predictively-sufficient statistic, and in turn to the existence and uniqueness of a predictively optimal Markovian representation for every stochastic process, whether the original process is Markovian or not. (Details.)
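As a small illustration of the definition above: once a process's optimal predictive states and the transition probabilities between them are known, the statistical complexity is the Shannon entropy of the stationary distribution over those states. The sketch below takes the states as given (it does not estimate them from data) and uses the textbook "golden mean" process as an assumed toy example; the function names are hypothetical, and the power-iteration step assumes an irreducible, aperiodic chain.

```python
import math

def stationary_distribution(T, iters=1000):
    """Stationary distribution of a row-stochastic matrix T (list of rows).

    Uses power iteration, so it assumes the chain is irreducible and aperiodic.
    """
    n = len(T)
    pi = [1.0 / n] * n
    for _ in range(iters):
        pi = [sum(pi[i] * T[i][j] for i in range(n)) for j in range(n)]
    return pi

def statistical_complexity(T):
    """Shannon entropy, in bits, of the stationary distribution over predictive states."""
    return -sum(p * math.log2(p) for p in stationary_distribution(T) if p > 0)

# Golden mean process (no two 1s in a row), written as its predictive states:
# state A emits 0 (stay in A) or 1 (move to B) with probability 1/2 each;
# state B must emit 0 and returns to A.  T sums over the emitted symbols.
T_golden = [[0.5, 0.5],
            [1.0, 0.0]]
print(statistical_complexity(T_golden))  # about 0.918 bits, i.e. H(2/3, 1/3)
```

An IID process has a single predictive state, so its statistical complexity is zero, however random its individual outcomes are.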

The same ideas also work on spatially extended systems, including those where space is an irregular graph or network, except that there the predictive representation is a Markov random field.

As a post-doc, I moved from the mathematics of optimal prediction to devising algorithms to estimate such predictors from finite data, and to applying those algorithms to concrete problems. On the algorithmic side, Kristina Klinkner and I devised an algorithm, CSSR, which exploits the formal properties of the optimal predictive states to efficiently reconstruct them from discrete sequence data. (This is related to, but strictly more powerful than, variable-length Markov chains or context trees.) With Rob Haslinger, I also developed a reconstruction algorithm for spatio-temporal random fields. We've used that to give a quantitative test for self-organization, and to automatically filter stochastic fields to identify their coherent structures (with Jean-Baptiste Rouquier and Cristopher Moore). My student Georg Goerg wrote his thesis in this area, extending the technique to continuous-valued fields and developing a nonparametric EM algorithm for it. My student George Montañez worked on fast, approximate algorithms for such prediction, before writing his thesis on an information-theoretic explanation for why machine learning works.

My more recent work falls into the areas of heavy tails, learning theory for time series, Bayesian consistency, neuroscience, network analysis and causal inference, with some overlap between these.

Heavy-tailed distributions are produced by many complex systems, and have attracted a lot of interest over recent decades. My most-cited paper, with Aaron Clauset and Mark Newman, concerns proper statistical inference for power-law (Pareto, Zipf) distributions. I have also worked on estimation and testing for a modified class of Pareto distributions, called q-exponential or Tsallis distributions, sometimes used in statistical mechanics.
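For a continuous power law with density p(x) = ((α − 1)/x_min)(x/x_min)^(−α) for x ≥ x_min, the maximum-likelihood estimate of the exponent has the closed form α̂ = 1 + n / Σᵢ ln(xᵢ/x_min), with approximate standard error (α̂ − 1)/√n. The sketch below assumes x_min is already known (in practice it must also be estimated, for example by minimizing the Kolmogorov-Smirnov distance between the data and the fitted tail); the function names are hypothetical. It simulates Pareto data by inverse-transform sampling and checks that the estimator recovers the exponent.

```python
import math
import random

def sample_power_law(n, alpha, x_min, rng):
    """Draw n values from a continuous power law with exponent alpha > 1."""
    # Inverse-transform sampling: P(X > x) = (x / x_min) ** (1 - alpha).
    # 1 - rng.random() lies in (0, 1], so every draw is finite and >= x_min.
    return [x_min * (1.0 - rng.random()) ** (-1.0 / (alpha - 1.0)) for _ in range(n)]

def fit_power_law_exponent(xs, x_min):
    """MLE of alpha for the tail xs >= x_min, plus its approximate standard error."""
    tail = [x for x in xs if x >= x_min]
    n = len(tail)
    alpha_hat = 1.0 + n / sum(math.log(x / x_min) for x in tail)
    std_err = (alpha_hat - 1.0) / math.sqrt(n)
    return alpha_hat, std_err

rng = random.Random(42)
data = sample_power_law(10_000, alpha=2.5, x_min=1.0, rng=rng)
alpha_hat, se = fit_power_law_exponent(data, x_min=1.0)
print(f"alpha_hat = {alpha_hat:.3f} +/- {se:.3f}")
```

Fitting a straight line to a log-log histogram, by contrast, gives biased estimates of the exponent, which is one reason to prefer the likelihood-based estimator.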

Learning theory: I collaborated with Daniel McDonald and Mark Schervish on extending statistical learning theory to time series prediction, aiming at reforming the evaluation of macroeconomic forecasting. Steps along the way include the non-parametric estimation of dependence coefficients [i, ii], and risk bounds for state-space models. (I'm also interested in risk bounds without strong mixing.) Separately, Aryeh Kontorovich and I have worked on establishing the right notion of predictive probably-approximately-correct learning. This is amenable to bootstrap bounds on the generalization error (with Robert Lunde).

I am increasingly interested in forecasting non-stationary processes, where I think the right goal is to achieve low regret through a growing ensemble of models (with Abigail Jacobs, Klinkner and Clauset).
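A common generic tool for low-regret forecasting with an ensemble is exponential weighting: after each observation, every model's weight is multiplied by exp(−η × loss), and the forecast is the weighted average of the models' predictions. The sketch below is that generic forecaster, not the specific method of the work cited above; the squared-error loss, the learning rate `eta`, and the rule that gives a newly added model the ensemble's current mean log-weight are all hypothetical choices.

```python
import math

def growing_ensemble_forecasts(expert_preds, outcomes, eta=1.0):
    """Exponentially weighted forecasts from an ensemble that can gain experts.

    expert_preds[t] lists the predictions at time t of every expert available
    then; experts keep their positions, and new ones are appended at the end.
    """
    log_w = []      # log-weights, kept in log space for numerical stability
    forecasts = []
    for preds, y in zip(expert_preds, outcomes):
        # Hypothetical entry rule: a newcomer starts at the current mean log-weight.
        while len(log_w) < len(preds):
            log_w.append(sum(log_w) / len(log_w) if log_w else 0.0)
        top = max(log_w)
        w = [math.exp(lw - top) for lw in log_w]
        forecasts.append(sum(wi * p for wi, p in zip(w, preds)) / sum(w))
        # Exponential-weights update: penalize each expert by its squared error.
        log_w = [lw - eta * (p - y) ** 2 for lw, p in zip(log_w, preds)]
    return forecasts

# Example: a second expert joins at t = 2, just as the series shifts level.
preds = [[0.0], [0.0], [0.0, 1.0], [0.0, 1.0], [0.0, 1.0]]
ys = [0.0, 0.0, 1.0, 1.0, 1.0]
print(growing_ensemble_forecasts(preds, ys))
```

Letting new models enter, instead of fixing the ensemble in advance, is what allows the forecaster to track a process whose behavior changes after the original models were chosen.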

Bayes: Bayesian inference is a smoothing or regularization device, trading variance for bias, rather than a fundamental principle. This viewpoint led to work with Andrew Gelman on how the practice of Bayesian data analysis relates to the philosophy of science. More technically, I am interested in the frequentist properties of Bayesian methods, especially the convergence of non-parametric Bayesian updating with mis-specified models and dependent data. My results on this rest on an identity, of independent interest, between Bayesian updating and the "replicator dynamic" of evolutionary biology.
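To make the identity concrete (the notation here is assumed, not taken from the papers): let π_t(θ) be the posterior weight on hypothesis θ after t observations, and L_t(θ) = p_θ(x_{t+1} | x_1, ..., x_t) that hypothesis's predictive likelihood for the next observation. Bayes's rule can then be written side by side with the discrete-time replicator equation for population shares x_t(i) of types with fitness f_i:

```latex
\pi_{t+1}(\theta)
  = \frac{\pi_t(\theta)\, L_t(\theta)}{\int \pi_t(\theta')\, L_t(\theta')\, d\theta'}
  = \pi_t(\theta)\, \frac{L_t(\theta)}{\bar{L}_t},
\qquad
\bar{L}_t \equiv \mathbb{E}_{\pi_t}\left[ L_t(\theta) \right]

x_{t+1}(i) = x_t(i)\, \frac{f_i}{\bar{f}_t},
\qquad
\bar{f}_t \equiv \sum_j x_t(j)\, f_j
```

Hypotheses play the role of types, posterior weight the role of population share, and predictive likelihood the role of fitness: a hypothesis gains weight exactly when it predicts the next observation better than the posterior-average hypothesis does.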

Neuroscience: One major application of CSSR has been to analyze the computational structure of spike trains (with Haslinger and Klinkner). One set of projects, with Klinkner and Marcelo Camperi, uses the reconstructed states to build a noise-tolerant measure of coordinated activity and information sharing called "informational coherence". Informational coherence, in turn, defines functional modules of neurons with coordinated behavior, cutting across the usual anatomical modules. In addition, I'm involved in more conventional statistical modeling of neural signals, such as using multi-channel EEG data to identify sleep anomalies (with Matthew Berryman), and analytic approximations to traditional nonlinear state-estimation (with Shinsuke Koyama, Lucia Castellanos and Rob Kass), applied to neural decoding.

Networks and causal inference: My work on functional connectivity and modularity is about extracting networks from coordinated behavior. (This, in a way, was also what the Six Degrees of Francis Bacon project was about.) In social systems, I am more interested in the reverse problem: how network structure shapes collective behavior. This has led me to explore, with Alessandro Rinaldo, the limits of exponential-family random graphs (with implications for dependent exponential families generally), and, in a very different direction, to work with Henry Farrell on the role of networks in institutional change. More notoriously, Andrew Thomas and I have shown that causal inferences on networks are generically confounded, though recently Edward McFowland and I have found some loopholes for networks where community discovery works well.

Currently, much of my time goes into non-parametric network modeling, looking at issues like how to map nodes from a graph into a continuous latent space (with Dena Asta), how to bootstrap random graphs (with Alden Green), and how to model large, sparse networks (with Neil Spencer). Ultimately, this line of work aims at statistical comparison of networks (with my former students Dena Asta and Lawrence Wang).

I am (slowly) writing a book on the statistical analysis of complex systems models.

Teaching

Student: Whenever there is any question, one's mind is confused. What is the matter?

Master Ts'ao-shan: Kill, kill!

In fall 2018, I will teach 36-467 / 36-667, data over space and time, an introduction to time series, spatial, and spatio-temporal statistics.

In 2017--2018, I was on much-needed sabbatical leave.

In spring 2017, I taught 36-402, undergraduate advanced data analysis, for the sixth time. In fall 2016, I taught two new half-semester courses on networks, one an introduction to statistical network models (36-720), and the other an advanced course on non-parametric network modeling (36-781).

In the past, I've taught 36-350, introduction to statistical computing (including co-teaching with Andrew Thomas and, originally, Vince Vu); 36-401, modern regression for undergraduates; 36-757 (the data analysis project class for Ph.D. students); 36-835 ("statistical modeling journal club"), co-taught with Rob Kass; the old 36-350, data mining; 36-490, undergraduate research, on my own and with Brian Junker; 36-220, engineering statistics and quality control; 36-462, "Chaos, complexity, and inference"; 36-754, advanced stochastic processes; and 46-929, financial time series analysis, with Anthony Brockwell.

Following those links, you'll get a draft textbook for undergraduate ADA, lecture notes for networks, linear regression and data mining, and slides (with a few notes) for statistical computing and for complexity and inference. The notes for stochastic processes turned into a 270-page book manuscript, under the working title of Almost None of the Theory of Stochastic Processes. (I am not so happy, in retrospect, with how I taught 220.) My old teaching page has my other lecture notes, and teaching evaluations from graduate school.

Why are students today not successful? What is the trouble? The trouble lies in their lack of self-confidence. If you do not have enough self-confidence, you will busily submit yourself to all kinds of external conditions and transformations, and be enslaved and turned around by them and lose your freedom. But if you can stop the mind that seeks [those external conditions] in every instant of thought, you will then be no different from the old masters. — Master I-hsüan

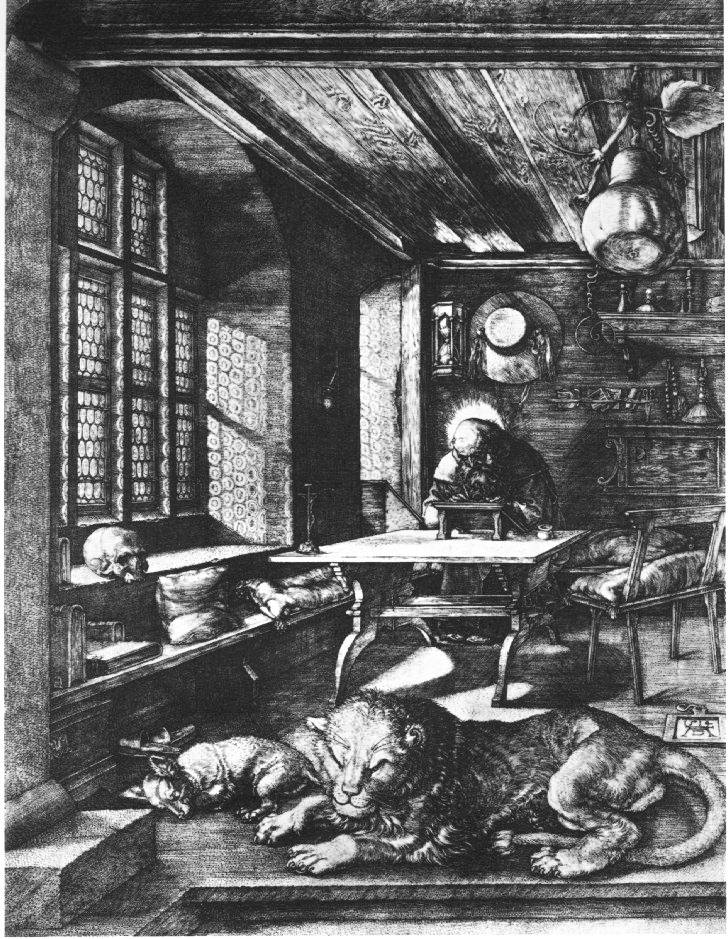

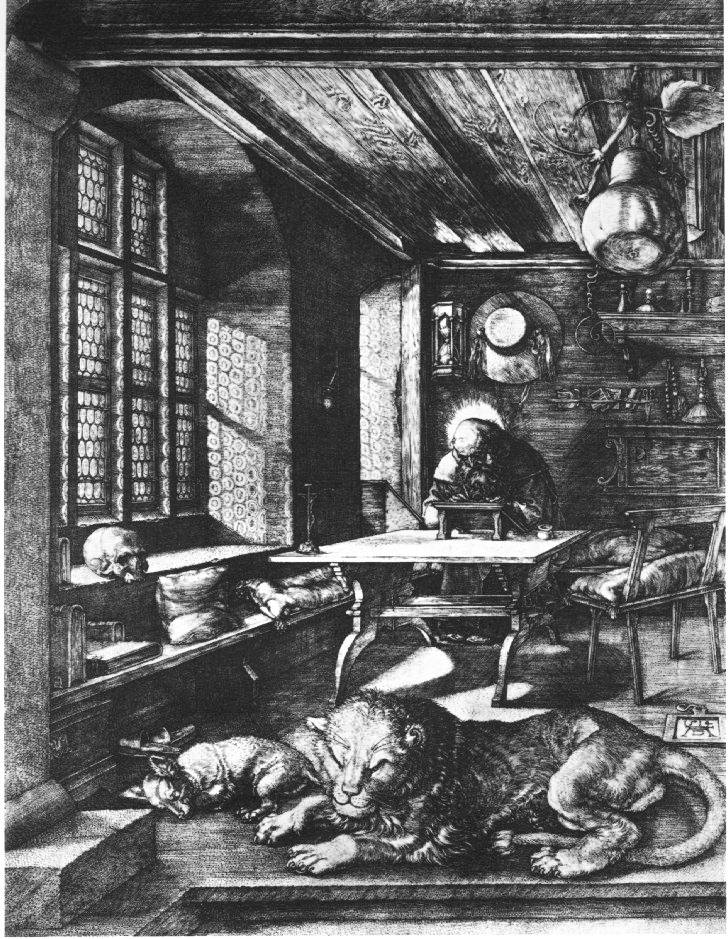

The scholar Zhong Kui, supported by his faithful assistants, sets out to quell the demons of ignorance and banish the ghosts of superstition.

Office hours in fall 2018 are 1:00--3:00 for students in 36-467, or by appointment; please look at my calendar first.